There arn’t many articles out there that discuss Azure Data Factory design patterns. One big concern I’ve encountered with customers is that there appears to be a requirement to create multiple pipelines/activities for every table you need to copy. This conjures up images of massive, convoluted data factories that are a nightmare to manage.

Whilst the ForEach functionality has existed in ADF V2 for a while now, it’s recently been enhanced to enable mass copying of tables without having to specify a target Linked Services and datasets for each table.

In this simple post I’m going to show you how to get started with this new functionality.

Using the Copy Data tool (and the Pipeline Editor), you can specify your target data source, then select multiple source tables. When you specify the target data source, Data Factory generates a ForEach activity that in turn calls a Copy activity that’s parameterized for each source table.

To get started we need to have an Azure Data Factory created, along with a Source and Target. In this example, I’ve used the Azure SQL Database with the sample AdventureWorks database and Azure Blob Storage as my target. See the following for assistance in getting setup –

Create An Azure Blob Storage Account

With that all done, we launch our newly created Data Factory from the Azure portal, and select the Copy Data wizard –

Which takes us to our Copy Data wizard. Fill in the the Task name and leave the rest as is.

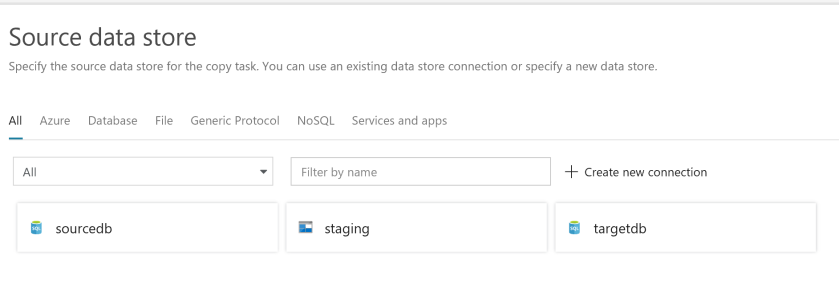

Click Next, and click “Create New Connection” to create our Source Linked Service –

For this example, choose “Azure SQL Database” –

With your Linked Service chosen, populate your source details (Server, Credentials etc) from the SQL Database you created earlier and click “Continue”. This takes you to the “Select Tables” screen, and select all the tables you wish to copy –

Define your destination data store in the same way as you created the source data store. In this example I’m using Azure Blob Storage as part of an ELT (Extract, Load & Transform) pipeline, and is called “staging” in my example. It’s my storage account which will act as the landing/staging area for incoming data.

In your Destination Linked Service, complete the necessary details as prompted. As I’m using Blob Storage I simply have to designate the path, which in this instance is my “raw” container within my landing area. If it was a database source this would ask for me to map source tables to target tables.

Finish the wizard and run the completed pipeline –

Once completed, we can see in our blob storage landing area that all my files have been successfully copied.

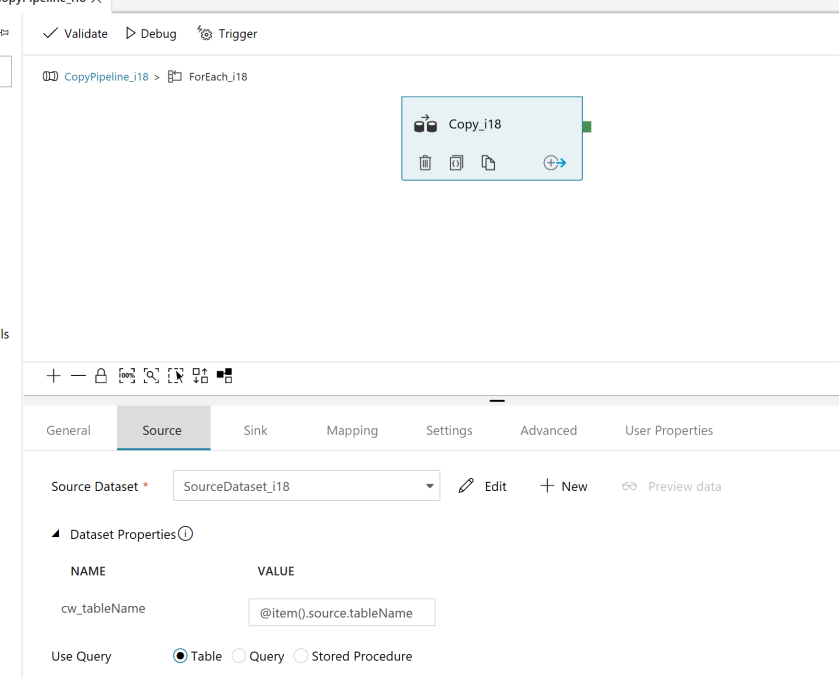

So far, so amazing, right? The even better bit is when we go to view the Data Factory pipeline we created, and instead of seeing loads of Copy activities, we simply see one, innocuous ForEach activity –

Expanding “Activities” shows us there’s just been one “Copy” activity created, and is in fact parameterized with the relevant values (source table, target destination etc) being passed as a JSON array.

Which then instructs our Copy activity what to do –

And we’re done. Much simpleness. For those of you have gone through the pain of creating endless packages in SSIS for simple copy/1:1 data flows, and have previously been creating similar amounts of Copy activities in Data Factory, hopefully this new release will make your ETL/ELT development a much simpler process.

Happy Factory-ing!

Can we map columns like one table to two target tables based on some condition. Without store proc in Azure data factory?

LikeLike

I have a different issue. My data is already in Blob Storage and now I want to load it to SQL Data Warehouse by using ADF. But it is loading only one table for each pipeline. Can you tell me how can I load multiple tables from Blob Storage to SQL DW with one pipeline activity?

LikeLike

Hi,

I am having a different scenario here. I am trying to load tables from blob storage to Azure SQL Data warehouse. I want to load them all using one pipleline but it is allowing only 1 table per one pipeline. could you please provide me the solution for it?

Thanks,

LikeLike